Contribution [Part 3] Toward a Future of Human Security and Well-being (1 of 3): History of Evolution Visible in Modern Humanity and Artificial Intelligence

Highlight

Since Article 1 of Part 1 was published in the Fall of 2022, this series has gone on to consider how the future might unfold in our time of volatility, uncertainty, complexity, and ambiguity (VUCA). Six articles have been published to date, making this the seventh. It has begun to seem that we have moved on from what was at first a form of VUCA driven by chance events to one that involves structural change in the foundations of society.

This series has taken a sequential approach to reporting on the developments currently underway that I believe to be important, with a focus on primary sources and what is happening on the ground. In truth, the issues associated with these developments are collectively emerging as the greatest challenge for the world of today. The echo-chamber effect of social media services, which present information tailored to individual users and to which anyone can contribute, has cut people off from primary sources, making it impossible to gain an accurate appreciation of the many problems that exist on the ground. Meanwhile, generative AI is transforming the very foundations of how information is acquired. These factors have created extensive divisions in society*1.

In Part 3, I will take an overview of these difficult times in which we live to consider in fundamental terms how we can find our way to a future that delivers human security and well-being. I do this because I believe that it will serve as a foundation for progress, combining information, control, and production and deployment [IT, operational technology (OT), and products].

In this article, I will be treating energy and entropy as a set. By doing so, I will be able to take a broad view of how evolution really operates in its most general form, from the birth of the universe when energy density was high and entropy low through to the formation of Earth’s biosphere, the process that took us from a single cell to a living environment rich in diversity, and how it is that electronic devices have been advancing so rapidly. A glimpse of the distant future can be seen in the initial conditions for evolution when life chose water and in how electrons and fields were the choice when it came to the initial conditions for the evolution of electronic devices.

Prediction of Digital Innovation

Gensai Murai (1864-1927), who served as live-in tutor for Hitachi’s founder Namihei Odaira, returned from a year-long visit to the USA in 1885 (at the age of 22). The first commercial telegraph line in America was built in 1845 and it is recorded that Abraham Lincoln (1809-1865) made good use of the technology in the presidential elections of 1864. Subsequently, Guglielmo Giovanni Maria Marconi (1874-1937) invented radio communications in 1895. On January 2 and 3, 1901, Gensai Murai published articles entitled “A Prophecy for the 20th Century” in the Hochi Shimbun newspaper. Nearly 125 years later, the accuracy of these predictions is astonishing and the second article in Part 2 of this series recounts how his analysis was even reported in a modern-day government white paper on science and technology.

The following are examples that relate to today’s information society.

Long-distance photography: “When war clouds darken the skies of Europe, a Tokyo reporter will be able by electrical means to obtain instant photographs of events despite being back at his newspaper office, and the photographs will show the scene in its natural colors.” This is a clear prediction of today’s satellite imagery and our ability to exchange images instantly across the Internet.

Convenient shopping: “People will be able to appraise distantly located goods on a photographic telephone, make transactions, and receive the goods instantly by underground delivery tubes.”

Apart from delivery not actually being by underground tube, this is an accurate prediction of how Internet shopping works today.

In terms of the concepts they convey, these two out of 23 predictions are enough on their own to constitute a prediction of how well today’s GAFAM companies [Google, Apple, Facebook (now Meta), Amazon, and Microsoft] are doing.

It happened that Hitachi’s founder Namihei Odaira met Gensai Murai in 1887, when Odaira was still only 14 years old. The pair would spend the next half-century together. Following Murai’s passing in 1927, Odaira took over part of Murai’s second home in Shonan from Murai’s wife, treating it as his own second home and devoting himself to the “greater Hitachi concept.” I hope to write more about this in a forthcoming article.

Taking inspiration from the evolution of the universe and life, and from the evolution of electronic circuits wrought by human beings, I intend in this article to look beyond these and ahead to the future.

“Digital” as a Concept that has Existed for Thousands of Years

I will begin by considering what the word “digital” actually means.

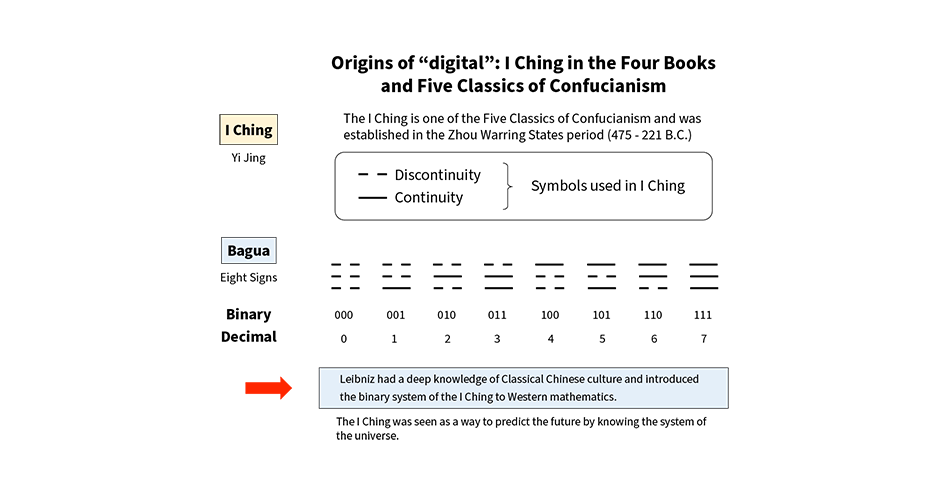

Based on the Four Books and Five Classics of Confucianism and other sources, the I Ching is said to have its origins in the Western Zhou period (1046 to 750 B.C.). It is a method used to classify nature and perform comprehensive searches for relationships using a binary-based system. It is also said to have been the basis for the binary system of Gottfried Wilhelm Leibniz (1646-1716). It also has a close affinity with the elemental reductionism of the Western philosopher René Descartes (1596-1650). While the concept of yin and yang is rooted in the cultures of Japan, China, and Korea, it also equates to the notion of a “bit.”

The Taegukgi, the national flag of the Republic of Korea, has trigrams in each of its four corners.
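The correspondence between yin/yang lines and bits can be sketched in a few lines of Python. This is an illustration only: the convention that a solid (yang) line is 1 and a broken (yin) line is 0, and the helper names `figures` and `to_int`, are assumptions made for this example rather than anything taken from the I Ching itself.

```python
from itertools import product

# Assumed convention for this sketch: solid (yang) line = 1, broken (yin) line = 0.
YIN, YANG = 0, 1


def figures(num_lines):
    """Enumerate every figure that can be built from num_lines yin/yang lines."""
    return list(product((YIN, YANG), repeat=num_lines))


def to_int(lines):
    """Read a tuple of lines as an unsigned binary number (first line = highest bit)."""
    value = 0
    for bit in lines:
        value = (value << 1) | bit
    return value


trigrams = figures(3)   # three lines, so 2**3 combinations
hexagrams = figures(6)  # six lines, so 2**6 combinations

# The four trigrams on the Taegukgi. Each is symmetric, so the reading
# direction (top-down or bottom-up) does not affect its bit pattern.
taegukgi = {
    "heaven": (1, 1, 1),
    "earth": (0, 0, 0),
    "water": (0, 1, 0),
    "fire": (1, 0, 1),
}

print(len(trigrams), len(hexagrams))
for name, lines in taegukgi.items():
    print(name, "".join(map(str, lines)), to_int(lines))
```

Seen this way, a trigram is a 3-bit word and a hexagram a 6-bit word, which is the sense in which yin and yang equate to a bit.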

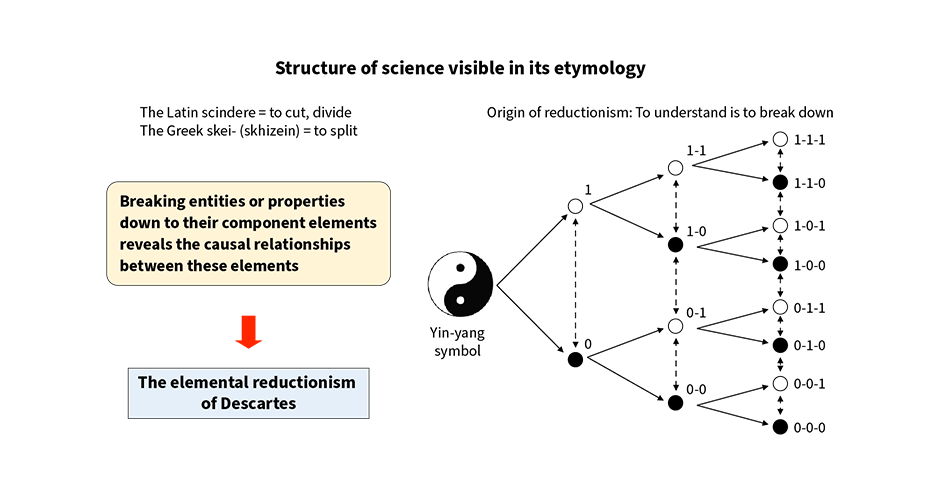

Figure 1—“Digital” and the I Ching

(A)

To understand how something works, it is necessary to break the system down to its component elements and then to put those elements back together to obtain an overview of what is happening. While Descartes is sometimes deemed an embodiment of elemental reductionism, he also noted the importance of this synthesis process of putting the elements back together. As he wrote, it is only when the deconstruction stage (reduction) is followed by a rebuilding process (synthesis) that an accurate understanding is obtained of the overall system. In this modern era in which fields are broken into different specializations and siloed from one another, this process of taking a long view and integrating all the different elements is of particular importance. Rather than elemental reductionism, I refer to this as Bird's-eye-view Integrationism. As shown in the figure, the word “science” comes from the Ancient Greek “skei-” (which gives us “sci-”) and means to gain understanding of something by breaking it down. In contrast, the “-gin-” in “engineering” means “to devise.” That is, it is the action of introducing human artifacts into the natural world. This is why ethics is a concept that is needed in engineering rather than in science.

(B)

The I Ching is one of five philosophies that have their foundations 3000 years ago in the Western Zhou period. As a binary system that uses two symbols representing continuity and discontinuity respectively, it is the ancestor of “digital.” The traditional Chinese system of medicine also has a close relationship with the I Ching. The recent discovery that a single-celled organism was the last universal common ancestor (LUCA) of all living things on Earth has led to biological classification taking on a “phylogenetic tree” form similar to that in Figure (A). The LUCA lived approximately four billion years ago.

(J. O. McInerney, “Evolution: A four billion year old metabolism,” Nature Microbiology (2016))

Digital and Analog

The “digital” concept also arose out of atomism, an idea that dates back to Ancient Greece. This says that all substances have a hierarchical structure made up of indivisible particles called atoms. In modern physics, atoms are composed of electrons, nucleons, and other elementary particles, with the 17th of these elementary particles, the Higgs boson, having recently been discovered. Meanwhile, digital and analog are not always clearly demarcated concepts. It all depends on the level you are talking about. As is well known, elementary particles can be thought of as continuous waves depending on the context. When broken down to the micro-level signaling that goes on between neurons, our own brains work on a principle whereby results are propagated as on or off depending on whether the input signals to those neurons exceed thresholds. This is very much a digital process.
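The threshold principle described above can be illustrated with a highly simplified model neuron. The sketch below is not a physiological model: the function name `threshold_neuron`, the weights, the input values, and the threshold are all hypothetical and chosen only to show continuous (analog) inputs being turned into an on/off (digital) output.

```python
def threshold_neuron(inputs, weights, threshold):
    """Return 1 (fire) if the weighted sum of the inputs reaches the threshold, else 0."""
    total = sum(x * w for x, w in zip(inputs, weights))
    return 1 if total >= threshold else 0


# Hypothetical analog input levels in arbitrary units.
weak_inputs = [0.2, 0.1, 0.3]
strong_inputs = [0.9, 0.8, 0.7]
weights = [1.0, 1.0, 1.0]   # equal weighting, purely for illustration
threshold = 1.5             # hypothetical firing threshold

# The weak inputs sum to less than the threshold, the strong inputs to more.
print(threshold_neuron(weak_inputs, weights, threshold))
print(threshold_neuron(strong_inputs, weights, threshold))
```

However finely the inputs vary, the output takes only two values, which is what makes the process digital at this level of description.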

Qualitative Change in Nature of Innovation

Innovation that takes the form of information, control, and production and deployment (IT, OT, and products) has a strong relationship with the binary system, with its connection to the I Ching, and with the concept of printing that is said to have been invented in Ancient China. That modern semiconductor manufacturing equipment is based on technologically advanced printing techniques is readily apparent when you look at how semiconductor chips are made. One can say that major changes in what is meant by manufacturing arose from the concepts of binary logic and the precise printing of modules.

One can also say that these represent much of the underlying groundwork that led to generative artificial intelligence (AI).

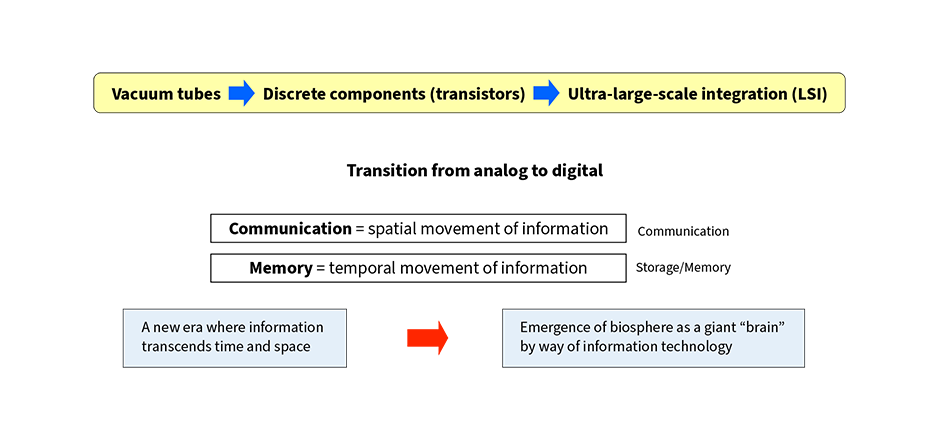

Figure 2—Qualitative Change in Innovation

In the era of vacuum tubes, devices containing filaments or plates with different designs or materials could still be given the same part designation provided their performance was equivalent. While this was a time when “manufacturing” still carried a suggestion of manual craftsmanship, the era of ultra-large-scale integration (ULSI) brought the high-volume production of chips made using printing techniques. What made this possible was a qualitative change due to semiconductor devices. The spatial movement and storage of information now cover the entire world thanks to networking and to devices with a high density of integration that operate at high speed. The basis of this was the ultra-rapid movement of information at near-light-speed using electrons and fields.

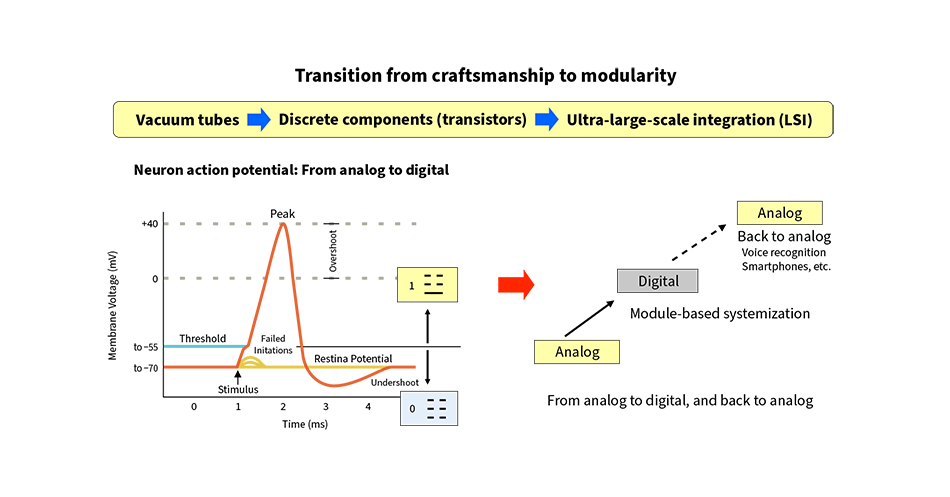

Figure 3—High-volume Production Brought about by Binary Logic (Bits) and Module Printing

The sensory information fed into the brain is largely in analog form. As it passes through the synaptic connections between neurons, however, it is digitized by the threshold checking that occurs based on neuron action potential. After processing in digital form, it is then transformed back to analog before finally being output.

Whereas IT devices are typically digital, human beings are thought of as being archetypically analog. In reality, however, interaction between neurons is a mix of both analog and digital. Moreover, things are digital at a microscopic level but analog at the macro level. That is, being “digital” in itself is not what matters. While I have been told by people in the arts that art is fundamentally human and not digital, the digital characteristics of the pipe organs that play such a vital role in church music, for example, are there for all to see. The keyboard is a set of switches that control the flow of air to the pipes that resonate to generate sound. Water organs in Ancient Greece used water pressure to generate their air flow. Nowadays, they work by means of electrically driven valves that open or close to control the flow of air and have a keyboard of on/off switches just like a personal computer.

These instruments are not like a piano where the way you strike the keys influences the volume and timbre of the sound. Nevertheless, they are still majestic instruments that can conjure up great emotion in the human heart.

On the other hand, this type of misunderstanding can conversely lead to the engineer’s fallacy that everything can be handled by switches alone. Care is required as the term “digital” is a relative one and whether something appears digital or analog will depend on the level with which you are concerned.

Information Propagation by Ions in Solution: Underlying Mechanism by which People Think and Feel

It has become apparent to me in recent times that a single point of departure has led to human thought and action and underpins the continuity of society. Thinking solely in terms of the laws of physics, I feel it may answer the question of how it is that modern humanity has acquired scientific technology and culture.

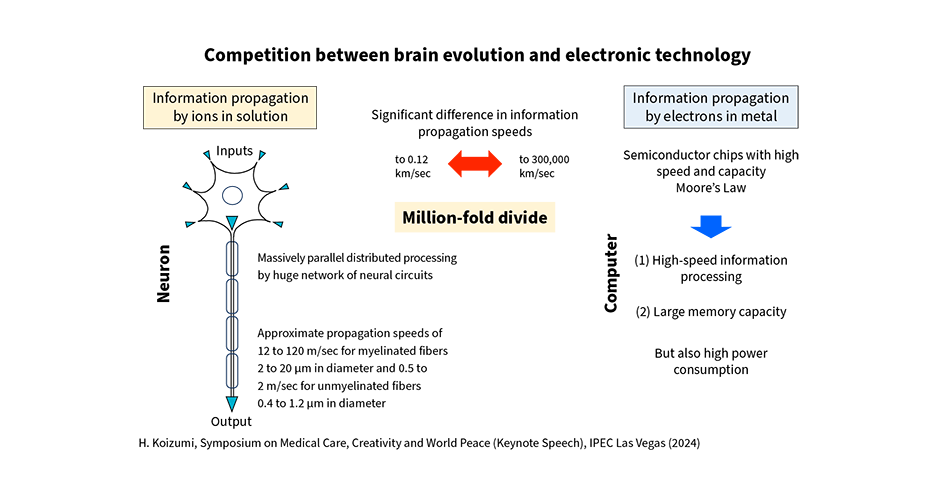

Whereas information propagation by neurons is accomplished by ions and fields in solution and by the diffusion of molecules, the electronic propagation of information happens at near light speed by means of free electrons and fields, more than a million times faster. As the evolution of animals got underway on the basis of its initial conditions, the end results of those initial conditions are an awareness of the world around us, the emergence of language, and the ability to contemplate the future.

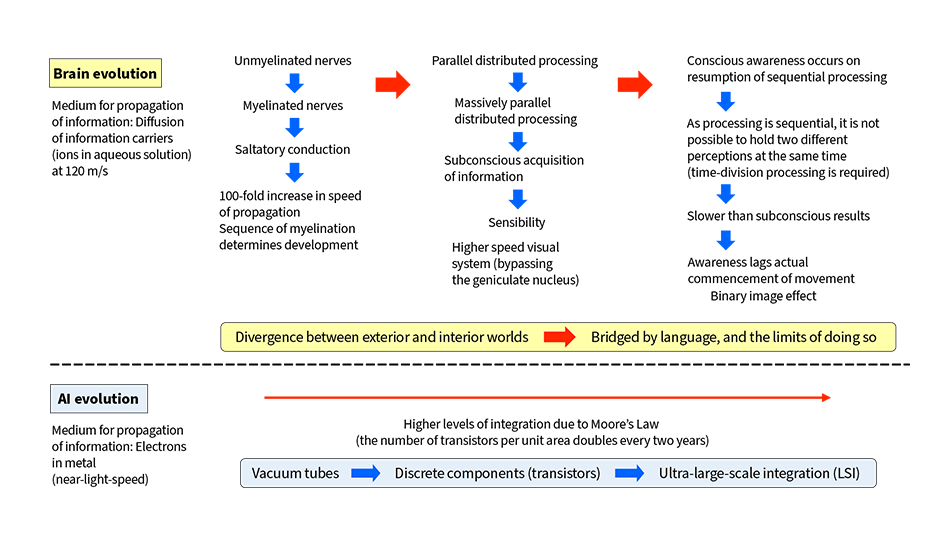

Figure 4—Speed Difference between Information Propagation by Ions in Solution Versus Electrons in Metal

Whereas the neural propagation of information occurs by means of ions in solution, information propagation in computers uses electrons in metal. This results in speeds that are more than a million times faster, a difference that definitively changes how information is processed in each case. It is also a difference that goes beyond the bounds of what is possible for biological evolution. Whereas myelinated fibers and saltatory conduction deliver an approximate 100-fold improvement over propagation via unmyelinated fibers, the only way to do any better than this is by parallel distributed processing. That is, it was the initial condition of low-speed information processing that determined which information processing techniques were subsequently adopted.

AI has made rapid progress in recent years. As the word “artificial” suggests, it falls under the category of biomimetic scientific techniques in that it mimics human neural circuitry. As such, the progress of AI itself constitutes a constructive approach that can enable modern humanity to acquire even deeper understanding. As a technology that has gained comprehensive public attention and entered practical use, its progress also constitutes a study into human thinking and perception.

Being slower by a million times or more than what is possible with free electrons or fields, this speed of information propagation in solutions, including neurons, gives us a glimpse into the pathway by which biological evolution gave rise to modern humanity.

A World and Life Made of Matter and Information

In conventional cosmology, the universe takes shape as it cools from a state of matter at very high temperature (that is, it is entropy that gives the universe its form). Elementary particles give rise to ions, atoms, and molecules, and from these nebulas, stars, and planets progressively emerge.

The Earth belongs to a solar system that in turn is part of a galaxy, with its physical existence as a planet said to go back about five billion years and likely to last another five billion more.

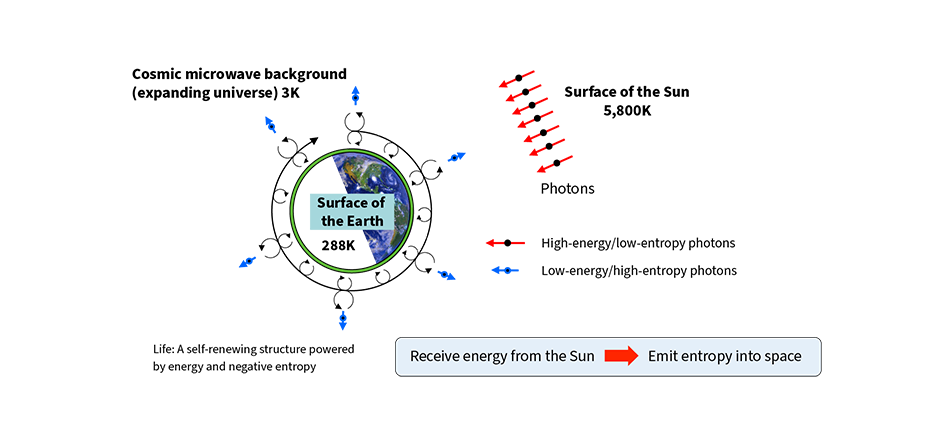

In principle, the first and second laws of thermodynamics (the law of conservation of energy and the law that entropy always increases) apply universally. As energy was shown by Albert Einstein’s (1879-1955) equation to be equivalent to mass, the total mass of a system also remains unchanged (the law of conservation of mass). The natural energy received by the Earth is due to the light from the Sun (photons). As the surface of the Sun is very hot, with an absolute temperature of 5,800 K, it emits photons with high energy and low entropy. The energy of these photons is absorbed by the surface of the Earth and this powers the entire heat engine whereby the increase in entropy drives the pump that is the hydrologic cycle and radiates into the cold of space (which has an absolute temperature of 3 K). That is, it operates as a huge heat engine that enables the movement of all living organisms, which are themselves heat engines.

Figure 5—Earth’s Biosphere as a Heat Engine: Link between Hydrologic Cycle and Biological Metabolism

The Earth’s biosphere receives high-energy/low-entropy photons from the hot (5,800 K) surface of the Sun and emits low-energy/high-entropy photons back into the cold (3 K) of space. An air-cooled gasoline engine operates as an efficient heat engine by utilizing combustion to generate high temperatures and an air-cooling fin to discharge the waste heat into the cool external atmosphere. The Earth’s biosphere likewise operates as a huge heat engine based on the differential between the Sun and space. Linked to this heat engine are numerous living organisms, each one itself a small heat engine. This maintains a state of homeostasis that allows life to live.
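To put rough numbers on the heat-engine picture, the sketch below applies two textbook relations to the temperatures quoted here: the ideal Carnot limit, 1 − T_cold/T_hot, and the Clausius relation S = Q/T for the entropy carried by heat Q at temperature T. It is an idealized illustration, not an estimate of how the real biosphere performs. The function names are hypothetical, and the extra 4/3 factor that applies to the entropy of thermal radiation is deliberately left out.

```python
T_SUN_SURFACE_K = 5800.0  # temperature of the Sun's surface, as quoted in the text
T_SPACE_K = 3.0           # temperature of deep space, as quoted in the text


def carnot_efficiency(t_hot_k, t_cold_k):
    """Ideal upper bound on heat-engine efficiency between two temperatures."""
    if t_cold_k <= 0 or t_hot_k <= t_cold_k:
        raise ValueError("require t_hot_k > t_cold_k > 0")
    return 1.0 - t_cold_k / t_hot_k


def entropy_of_heat(q_joules, temperature_k):
    """Clausius entropy S = Q / T carried by heat Q at temperature T (in J/K)."""
    return q_joules / temperature_k


limit = carnot_efficiency(T_SUN_SURFACE_K, T_SPACE_K)
s_in = entropy_of_heat(1.0, T_SUN_SURFACE_K)   # entropy arriving with 1 J of sunlight
s_out = entropy_of_heat(1.0, T_SPACE_K)        # entropy leaving with 1 J dumped at 3 K

print(f"ideal Carnot limit: {limit:.5f}")
print(f"entropy in per joule:  {s_in:.3e} J/K")
print(f"entropy out per joule: {s_out:.3e} J/K")
```

The same joule of energy carries far more entropy when it leaves than when it arrives, and it is this difference, rather than the energy itself, that the biosphere draws on.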

In the primordial atmosphere of 3.8 billion years ago that was composed mainly of carbon dioxide, cyanobacteria emerged that used the energy of photons from the Sun and the entropy of water molecules to perform oxygen-generating photosynthesis. These multiplied in large numbers during the earliest epoch of life, creating an atmosphere that also contained oxygen through the fixation of carbon over the billions of years that followed. Chlorophyll-containing cells became incorporated into plant cells in a symbiotic arrangement that enabled plants to obtain energy directly from sunlight. Animals sustain themselves by eating these plants, and life itself is likewise sustained as these animals are themselves part of a food chain of the eaters and the eaten. Modern humans are not excluded from this process. This is because our lives, too, are sustained by the operation of a heat engine that processes energy and entropy. For example, water and fertilizer are needed for the flowers we grow in our homes. Both are important, but whereas a lack of fertilizer will result in a gradual deterioration, flowers will soon wilt if not watered. This is because the water absorbed by a plant is needed for the chemical reactions in the plant to proceed as they should, with the transpiration of large numbers of water molecules acting to cool the plant before carbon fixation can happen. While there is little literature on the topic, there are even cases where more water molecules are involved in transpiration than are used for energy metabolism. If you take away the air-cooling fin from an engine, the engine will not fail due to heat but rather the buildup of entropy will prevent it from outputting power. To see this, take a look at the photograph of an air-cooling fin that featured in my explanation of an ordinary internal combustion engine in Figure 3 of Article 1 in Part 2.

Another way of looking at it is that the processing of information is an extrapolation of the processing of entropy. The equation used in Claude Elwood Shannon’s (1916-2001) definition of information is isomorphic (equivalent) to the equation for entropy in thermodynamics and statistical mechanics.
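The correspondence can be checked numerically. Shannon's entropy is H = −Σ p log₂ p (in bits) and the Gibbs entropy of statistical mechanics is S = −k_B Σ p ln p (in J/K), so for the same probability distribution the two differ only by the constant factor k_B ln 2. The distribution used below is an arbitrary example and the function names are hypothetical.

```python
import math

BOLTZMANN_J_PER_K = 1.380649e-23  # Boltzmann constant (exact SI value)


def shannon_entropy_bits(probs):
    """Shannon entropy H = -sum(p * log2(p)), in bits."""
    return -sum(p * math.log2(p) for p in probs if p > 0)


def gibbs_entropy(probs):
    """Gibbs entropy S = -k_B * sum(p * ln(p)), in J/K."""
    return -BOLTZMANN_J_PER_K * sum(p * math.log(p) for p in probs if p > 0)


probs = [0.5, 0.25, 0.25]  # arbitrary example distribution over three states

h_bits = shannon_entropy_bits(probs)
s_thermo = gibbs_entropy(probs)

# Converting bits to J/K with the factor k_B * ln(2) reproduces the Gibbs value.
s_from_bits = h_bits * BOLTZMANN_J_PER_K * math.log(2)

print(h_bits, s_thermo, s_from_bits)
```

One bit of information therefore corresponds to k_B ln 2 of thermodynamic entropy, which is the sense in which the two equations are isomorphic.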

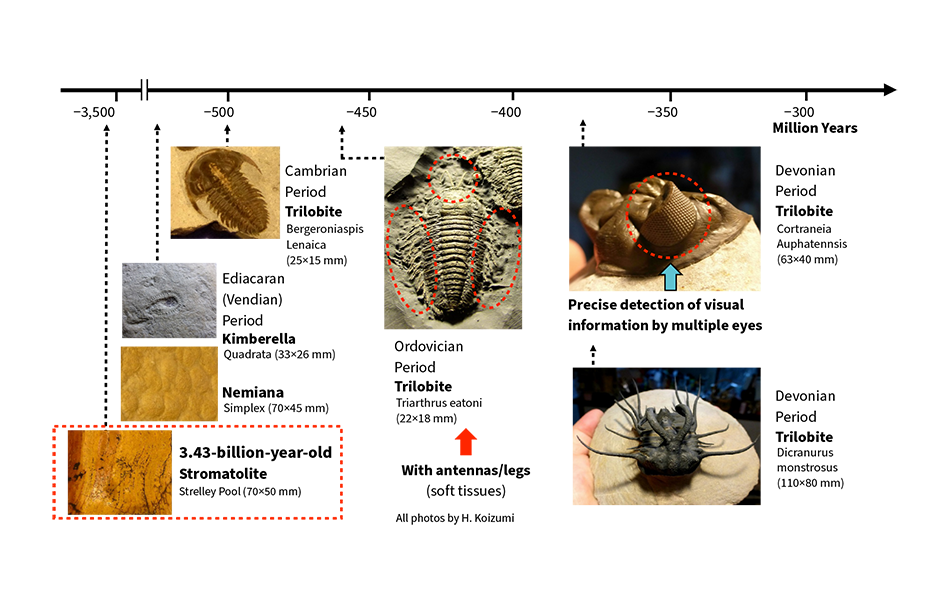

Figure 6—Fossils of Paleozoic and Earlier Organisms

When you look at evolution in terms of physics, it brings to light the principles of displacement and speed due to movement that are difficult to address using conventional paleontology. While the Ediacaran (Vendian) period that predates the Cambrian has been characterized as a time of calm that lacked predators, the discovery of the Kimberella trace fossils in recent years has shown that organisms did engage in predation. This concept of predators seems to have a longer history on the geological time scale than we had anticipated. On the other hand, the concept also shows itself in today’s war-ridden politics.

Most of the phyla that exist today originated during the Cambrian, and the functions that organisms use to move have their origins in the period. To give one example, the role that mechanical impedance plays in evolution will be an important field of future study.

Paralympic competitions featuring competitors with prosthetics have already started breaking records that surpass those of the ordinary Olympics. Although prosthetics lack muscles and so do not contribute kinetic energy, improvements in mechanical impedance relative to the ground can confer superior movement capabilities. (Whereas the male long jump world record in the T64 class for athletes with prosthetics and functional impairments is 8 m 72 cm, the winning jump at the 2021 Tokyo Olympics was only 8 m 41 cm.)

What is Modern-day Generative AI?

In 2023, when generative AI was having a major impact on society, the Engineering Ethics and Education for Human Security and Well-being Project of the policy recommendation committee at the Engineering Academy of Japan (EAJ) co-hosted a symposium with the Research Center for Advanced Science and Technology (RCAST) at the University of Tokyo and invited Yutaka Matsuo, a professor at the university who has a long history of work on AI research.

Held on December 15, 2023, the discussions at the symposium reached the following understanding of the current state of AI.

The basic principle of AI is the progressive modification of the synapse weightings in a circuitry that mimics the neural network of the brain. Deep learning extends this by adding further layers and adjusting the weightings through the back-propagation of errors; it began to make rapid progress from around 2018.
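The idea of progressively modifying weightings can be sketched with the smallest possible layered network: one input, one hidden unit, and one output. The example below is a toy illustration and not the method used in any production system. The tanh activation, the learning rate, the initial weights, and the training target are all assumptions chosen for the example.

```python
import math


def forward(x, w1, w2):
    """Two-layer toy network: hidden = tanh(w1 * x), output = w2 * hidden."""
    hidden = math.tanh(w1 * x)
    return hidden, w2 * hidden


def loss(x, target, w1, w2):
    """Squared-error loss 0.5 * (output - target)**2."""
    _, output = forward(x, w1, w2)
    return 0.5 * (output - target) ** 2


def train_step(x, target, w1, w2, learning_rate=0.1):
    """One gradient-descent update, with the error propagated back layer by layer."""
    hidden, output = forward(x, w1, w2)
    error = output - target                           # d(loss)/d(output)
    grad_w2 = error * hidden                          # gradient for the output layer
    grad_hidden = error * w2                          # error passed back to the hidden layer
    grad_w1 = grad_hidden * (1.0 - hidden ** 2) * x   # tanh'(z) = 1 - tanh(z)**2
    return w1 - learning_rate * grad_w1, w2 - learning_rate * grad_w2


# Hypothetical starting weights and a single training example.
w1, w2 = 0.5, 0.5
x, target = 1.0, 0.8

initial_loss = loss(x, target, w1, w2)
for _ in range(200):
    w1, w2 = train_step(x, target, w1, w2)
final_loss = loss(x, target, w1, w2)

print(initial_loss, final_loss)
```

Real deep-learning systems apply the same chain-rule bookkeeping across many layers and very large numbers of weights, typically using automatic differentiation rather than hand-written gradients.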

Generative AI commonly refers to techniques such as large language models (LLMs) that, given a text, work by progressively predicting what the next word will be based on the data on which the model has been trained, and diffusion models that generate images by adding and eliminating noise. The attention mechanism also seen in human beings is frequently used in AI processing. In the case of LLMs, what has allowed generative AI to enter widespread use is the outcome, unexpected in statistical terms, that accuracy can be improved by increasing the size of the model (number of parameters) and the number of semiconductor chips, which are called graphics processing units (GPUs). According to material prepared by Professor Yutaka Matsuo, GPT-3 was trained on 400 billion words of text from the web, has 175 billion parameters, and cost in the region of several hundred million to several billion yen to train*2.
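The principle of predicting the next word from training data can be shown with a far simpler stand-in for an LLM: a bigram model that merely counts which word most often follows each word. This is a swapped-in toy technique, not how GPT-3 or any LLM works internally; the tiny corpus and the function names are made up for the illustration.

```python
from collections import Counter, defaultdict


def train_bigram(text):
    """Count, for every word in the text, which words follow it and how often."""
    words = text.lower().split()
    table = defaultdict(Counter)
    for current, following in zip(words, words[1:]):
        table[current][following] += 1
    return table


def predict_next(table, word):
    """Return the most frequent follower of word, or None if the word was never followed."""
    followers = table.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


def generate(table, start, max_words):
    """Extend a text one word at a time by repeatedly predicting the next word."""
    output = [start.lower()]
    for _ in range(max_words):
        following = predict_next(table, output[-1])
        if following is None:
            break
        output.append(following)
    return " ".join(output)


# Made-up miniature training corpus.
corpus = "the sun heats the earth and the earth radiates heat and the earth cools"
table = train_bigram(corpus)

print(predict_next(table, "the"))
print(generate(table, "sun", 5))
```

An LLM replaces the count table with a neural network holding billions of parameters and conditions on a long context rather than a single preceding word, but the generation loop has the same shape: predict a word, append it, and repeat.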

*2 The above information was adapted by the author from a presentation by Professor Yutaka Matsuo. For a more authoritative account, please refer to the professor’s published works.

Trial and Error Approach to Neuromorphic Computer

While physics has remained my mainstay, the practical deployment of measurement techniques that I have established has also led to my studying environmental science, clinical medicine, medical sciences (including the study of the mind), and neuroscience. Working in neuroscience also inevitably leads to an involvement with AI. In the 1990s, Professor Gen Matsumoto [who transferred from the Electrotechnical Laboratory to the Institute of Physical and Chemical Research (RIKEN)] was one of the true leaders in the field. I would like to mention a research and development result regarding a neuromorphic chip, which Professor Matsumoto noted was something he had always wanted to create. I do so because I want to take the information I write about from primary sources and those active in the field.

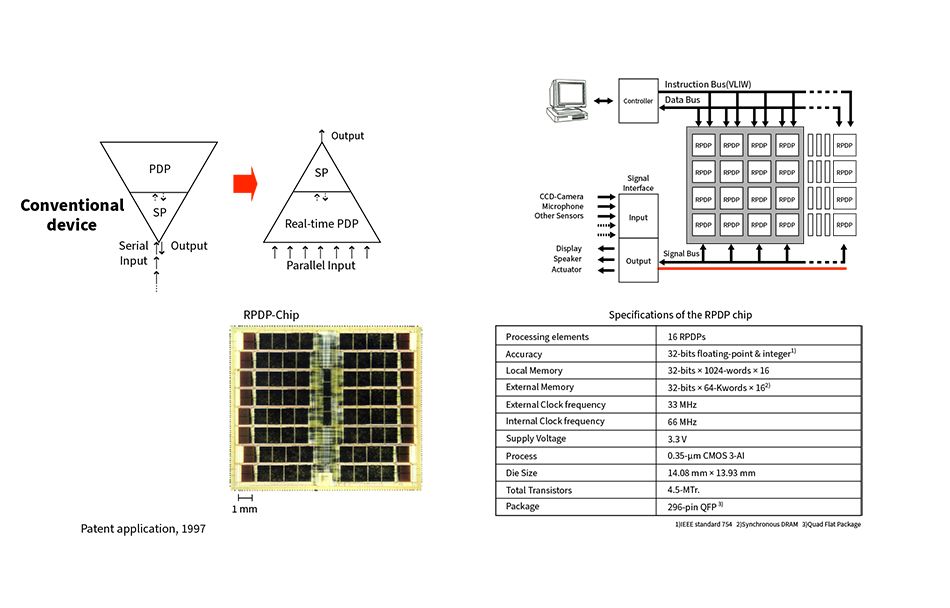

The world’s first neuro-computer product was built in the 1980s by what was then Hitachi Microcomputer System, Ltd.*3. Looking back now, this inaugural product was probably ahead of its time. The range of applications where it could have a significant impact turned out to be very narrow and it was abandoned. Nevertheless, a core of people who had retained their enthusiasm for the product got back together and developed this chip I am describing here. Following a recent brief discussion I had with Professor Takeo Kanade of Carnegie Mellon University, which happened in 2025 after I was already at work on this article, I suspect there could be value in revisiting the device as a new type of chip for use in robots at this time of change in robot training practices (physics engines).

*3 Hitachi Microcomputer System, Ltd. started out as Hitachi Microcomputer Engineering Co., Ltd. in 1980. Following a series of restructurings, it has since 2019 been known as Hitachi Solutions Technology, Ltd. The company got its start in the early days of AI and its progress has been an example of the turns and twists that happen prior to the flowering of a new technology like AI.

Figure 7—Neuromorphic RPDP Chip Architecture and Prototype Results (around 1996)

RPDP: real-time parallel distributing processor

VLIW: very long instruction word

SIMD: single instruction stream/multiple data stream

As neuro-computers at that time were used as accelerators in conventional von Neumann architecture (serial processing) computers, they could not deliver the high-speed performance demanded by applications such as future robotics where real-time processing is needed, as shown in the top-left of Figure 7. The architecture of the neuromorphic chip featured an additional dedicated bus for continuous input and output.

The optical topography technique developed by Hitachi’s Central Research Laboratory in 1995 was commercialized by what was then Hitachi Medical Corporation. The full-head version has 120 channels and provides video that shows changes in the cerebral cortex and the distribution of active areas. We even tried to use the video to train an AI so that it could verbalize what a subject is thinking*4.

*4 H. Koizumi et al., “Dynamic optical topography and the real-time PDP chip: An analytical and synthetical approach to higher-order brain functions,” The Fifth International Conference on Neural Information Processing, 337-340 (1998)

The same journal also carried a paper by Dr. Shun'ichi Amari, a researcher who proposed pioneering concepts in the field of information geometry long before the Nobel Prize in Physics was awarded for work on AI in October 2024.

The world’s fastest supercomputer (based on the TOP500 rankings) in 1996, about 30 years ago, was the CP-PACS at the University of Tsukuba (made by Hitachi). Using the performance of this supercomputer as a benchmark, he designed what was then the fastest and highest density neuromorphic device (the RPDP chip) in collaboration with Hitachi Microcomputer System, Ltd. and had it fabricated at Hitachi’s Musashi Works. The speed of the chip for real-world tasks was compared with the latest supercomputer at the University of Tsukuba. The system was also equipped with two buses so that it could simultaneously handle both input from the outside world and the results of neuromorphic chip processing. The performance of Japan’s supercomputers in the 1990s was world-class, as was its semiconductor manufacturing technology*5.

*5 The Hitachi SR2201 supplied to the University of Tokyo held top place in the TOP500 rankings for supercomputer computing speed in June 1996 and the CP-PACS jointly developed by the University of Tsukuba and Hitachi held this position in November 1996.

Human Artifacts and Artificial Intelligence

Physics can give us a glimpse into certain aspects of consciousness and the nature of language for humans living in nature.

Right now, very major changes in the history of the world are taking place over a short period of time. We have now entered the geological period of the Anthropocene, a time in which humans have a direct impact on the world. While the numbers have yet to be confirmed, it seems highly likely that the total weight of all human-made objects (human artifacts) now exceeds that of all living things on Earth*6.

*6 E. Elhacham et al., “Global human-made mass exceeds all living biomass,” Nature, 588, 442-444 (2020)

Meanwhile, the field of information processing has in some areas surpassed the brain function of modern humans, Earth’s most highly evolved species. Regarding the concept of artificial intelligence, the term “AI” comes from the 1956 Dartmouth Summer Research Project attended by Marvin Minsky (1927-2016) and others and derives from a new concept for information processing machines devised in 1950 by Alan Mathison Turing (1912-1954). In a paper published in 2025, the latest ChatGPT*7 model achieved more than 80% on the Turing test. I had an opportunity to talk with Professor Minsky many years ago and found that he was an exemplar of trans-disciplinarity with an approach to thinking that transcended specific fields.

Biological evolution involves the transformation of water-based life forms, with aqueous solutions providing the basis for the propagation of information. That is, the transmission of nerve signals occurs by means of ions in aqueous solution, a method that even in the fastest motor neurons clocks in at only 120 m/sec. Man-made information propagation, in contrast, takes place by means of free electrons in metals and is more than a million times faster, operating at speeds close to the speed of light. As far as we know, there is no possible way in which biological evolution could overcome this million-fold divide (although we cannot entirely rule out the possibility that it could be achieved by quantum effects).
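The million-fold figure can be checked with rough arithmetic. The sketch below takes the 120 m/sec nerve conduction speed from the text and, as an illustrative assumption not stated in this article, supposes that an electrical signal travels along a conductor at somewhere between half and the full speed of light. The function and constant names are hypothetical.

```python
# Rough check of the speed gap between nerve conduction and electrical signalling.
NERVE_SPEED_M_PER_S = 120.0        # fastest motor neurons (figure from the text)
LIGHT_SPEED_M_PER_S = 299_792_458  # speed of light in vacuum (defined constant)


def speed_ratio(signal_fraction_of_c: float) -> float:
    """Ratio of electrical signal speed to nerve conduction speed.

    signal_fraction_of_c is an assumed fraction of the speed of light at which
    a signal propagates along a conductor (illustrative, not from the article).
    """
    return signal_fraction_of_c * LIGHT_SPEED_M_PER_S / NERVE_SPEED_M_PER_S


for fraction in (0.5, 1.0):
    print(f"{fraction:.1f} c -> {speed_ratio(fraction):.2e} times faster")
```

Even under the more conservative assumption of half the speed of light, the ratio stays above one million, which is consistent with the order of magnitude given above.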

Accordingly, modern-day computers are overwhelmingly superior at the rapid recording of large amounts of information, even using current semiconductor devices. Whether humans can go on using such computers indefinitely is an open question.

Physics has now reached a point where it is reassessing the basis of human cognition itself, treating it as something that falls within the scope of the field.

*7 ChatGPT and GPT-4 are trademarks or registered trademarks of OpenAI OpCo, LLC in the USA.

Relationship between Modern Humanity and Artificial Intelligence

Information is transmitted by nerves in humans and by wires in computers. As noted above, these operate at very different transmission speeds, differing by a factor of more than one million. For this reason, the human brain has evolved as a concurrent processing system that uses parallel distributed processing to handle large amounts of information. All of the perceptual information we acquire through our senses is immediately split into its elements, which are processed separately and concurrently. Our conscious awareness of this information only arises after these elements are merged back together and processing becomes sequential once more. The core operation of artificial intelligence closely mimics parallel distributed processing in humans. To reiterate, the key feature of computers is their speed of information transmission.
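The split, process, and merge sequence described above can be loosely mirrored in software. The following is a minimal sketch of that pattern only, not a model of the brain or of any particular AI system. The feature names, the trivial per-element processing step, and all function names are hypothetical choices made for illustration.

```python
from concurrent.futures import ThreadPoolExecutor


def split_into_elements(percept: dict) -> list:
    """Break a percept into independent (feature, value) elements."""
    return list(percept.items())


def process_element(element: tuple) -> tuple:
    """Process one element in isolation (a stand-in for feature analysis)."""
    feature, value = element
    return feature, str(value).upper()


def perceive(percept: dict) -> str:
    elements = split_into_elements(percept)
    # Concurrent stage: each element is handled separately by a worker.
    with ThreadPoolExecutor() as pool:
        processed = list(pool.map(process_element, elements))
    # Sequential stage: the results are merged back into one description.
    return ", ".join(f"{feature}={value}" for feature, value in processed)


print(perceive({"colour": "red", "shape": "round", "motion": "still"}))
```

The single merged string only becomes available after every element has been processed, which echoes the point that awareness arises once the separately handled elements are brought back together.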

While the general form taken by the circuitry of the modern human brain is determined by genes, just as how environmental adaptation works, the basic structure of the brain also incorporates very many elements from the environment so that it can be optimized based on actual conditions. As there is a critical period during infancy in particular, the environment during this time is of great importance.

A model of the outside world is constructed in the brain’s inner world. This is done by acquiring information about the outside world through the senses, breaking this down into its elements, performing parallel distributed processing, and then reintegrating it. Because of individual differences in how this happens, there will be subtle differences between what each of us sees as being the true outside world. To compensate, the process of evolution has equipped us with symbols and language so that we can share a common perception of the world. These symbols and languages have become vital for providing a degree of conceptual uniformity in what we see and feel. As was noted very early on by people such as the linguist Ferdinand de Saussure (1857-1913), words are often quite arbitrary. One extreme example is how it is possible to say or write sentences that are grammatically perfect but which make no sense at all. This makes it easy to produce fake news. What this characteristic of language makes clear is how easy it is for social media filters that are determined by people’s preferences to create a situation in which the worlds of different groups become disconnected from each other. I believe this is one of the main reasons behind the divisiveness of modern times.

A New Approach to Trans-disciplinarity

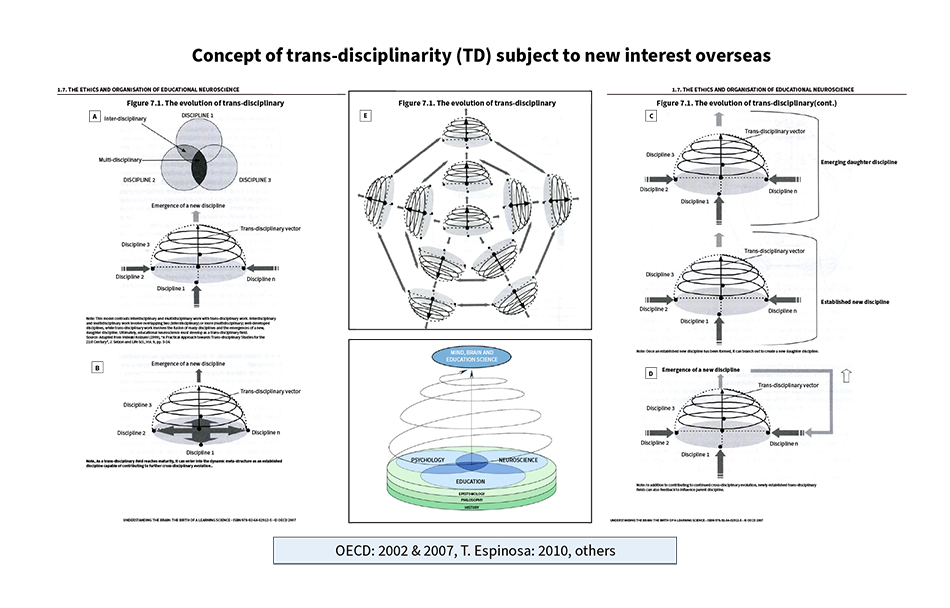

As noted near the beginning of this article, I believe that trans-disciplinarity has a direct bearing on research and development methodologies for linking and combining IT, OT, and practical deployment. I have already presented this concept in Figure 4 and Figure 5 in Article 3 of Part 1. Furthermore, my views on the importance of trans-disciplinarity are in close agreement with those of the philosopher Professor Markus Gabriel of the University of Bonn. The topic is also treated in detail in a white paper published by the Organisation for Economic Co-operation and Development (OECD). Putting this methodology into practice requires the process shown below.

Figure 8—Trans-disciplinarity

A concept that initially came out of Japan, trans-disciplinarity has also been a topic of considerable debate overseas, to the extent that the term is now frequently used in Europe and America. Three cases are listed below.

(1) Link and combine numerous different fields to devise new science and technology and to develop new industries.

(2) Link and combine science and technology with the humanities and social science to develop new fields of study.

(3) Link and combine research with practical applications (tacit knowledge and formal knowledge).

The overseas debate arose out of the concept depicted in Figure A on the top-left. The OECD figure includes the annotation, “Source: Adapted from Hideaki Koizumi (1999), “A Practical Approach towards Trans-disciplinary Studies for the 21st Century”, J. Seizon and Life Sci., Vol. 9, pp.5-24.” A Japanese version is available in Figure 7.1 “The Evolution of Trans-disciplinary Studies” (p.211-213) in “Understanding the Brain: The Birth of a Learning Science” from the OECD Centre for Educational Research and Innovation (for this version, editorial supervision was provided by Hideaki Koizumi and it was translated by Maki Koyama, et al.).

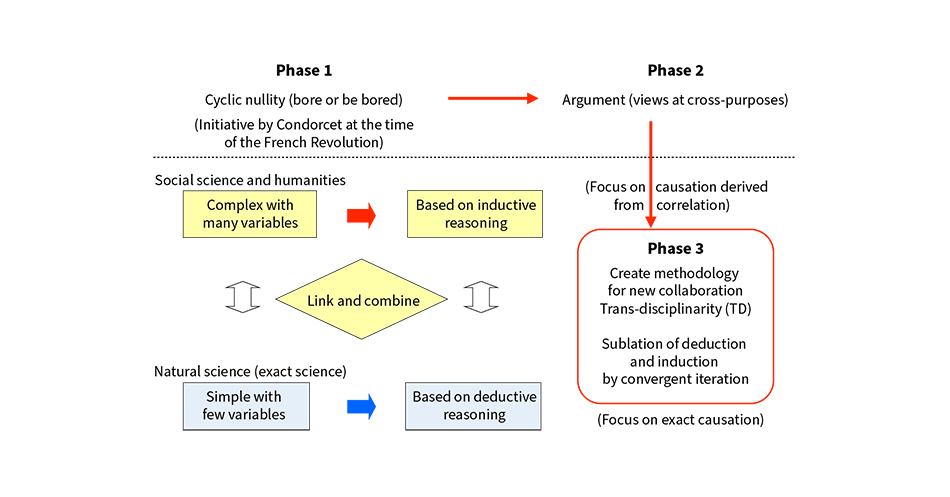

Historically, situations in which little actual progress is made despite the importance of innovation being asserted everywhere have recurred many times since the French Revolution.

At the time of the French Revolution, Marie Jean Antoine Nicolas de Caritat, Marquis de Condorcet (1743-1794) took republican ideas beyond the political system, arguing also that republican structures should be adopted in academia to break down the divisions that separate finely demarcated academic disciplines. He also made the point that people from widely separated fields find it very difficult to engage in meaningful debate. The much commented on problem of academia being split into silos is nothing new. When you put the fields of natural science and technology together with philosophy and ethics from the humanities, or even with the social sciences, obtaining clear results is near impossible.

Likewise in national projects, transcending the barriers between finely demarcated academic disciplines and technologies poses the greatest challenge in cases such as when creating new fields. I believe that the same can be said of business management. It is only by linking and combining different areas that we will be able to deliver innovation.

Abductive Reasoning and Generative AI

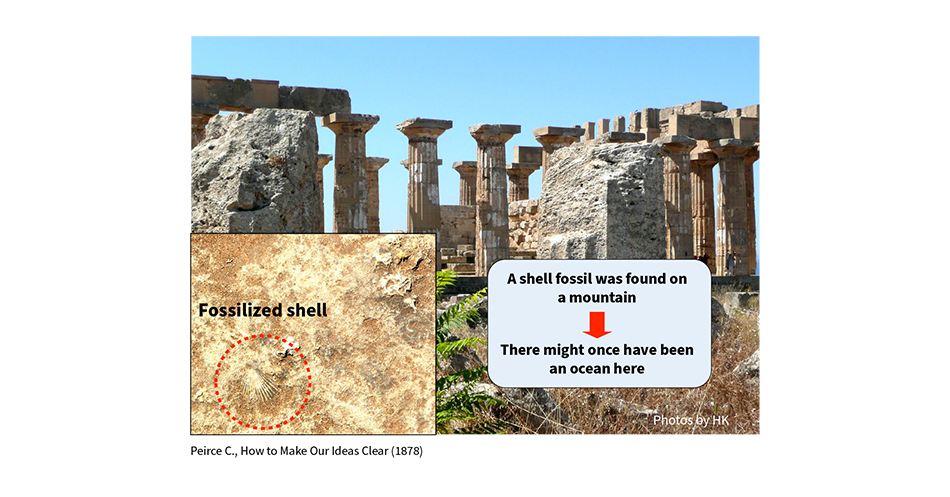

Abductive reasoning is a concept put forward by the American philosopher of pragmatism, Charles Sanders Peirce (1839-1914).

Figure 9 uses a familiar example to explain the concept. A radical international conference has been held annually in Sicily at a venue located not far from the ruins of an ancient Greek colony. If you take a close look at the pillars of its hilltop temple, you will find many fossilized shells. How could the fossils of sea-dwelling shellfish come to be atop a hill?

One hypothesis that quickly comes to mind is that the hill was formed by the uplift of what was once seabed. Another hypothesis is that the pillars were carved from stone taken from the nearby seashore and then transported to the hill. Unfortunately, while this hypothesizing is useful, it does not constitute reasoning.

As AI becomes more commonplace, it will become possible for humans to devise hypotheses from their experience or intuition and have AIs test them in a variety of ways. This has the potential to be helpful both in science and in business.

Figure 9—Abductive Reasoning (Abduction)

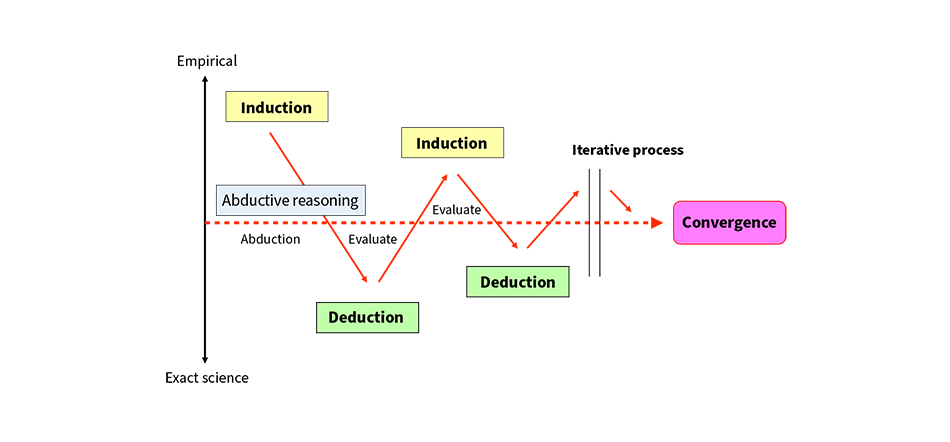

The interdisciplinary research and development methodology demonstrates that testing hypotheses in a convergent manner that alternates between deductive and inductive approaches is effective in bringing the natural sciences and technology together with social science and the humanities and homing in on conclusions.

Figure 10 and Figure 11 show how this works.

Figure 10—Methodology that Links and Combines the Natural Sciences with Social Science and the Humanities (Used in Major National Project)

People working in the natural sciences or engineering tend to make their arguments by means of deductive logic. The problems they deal with are often simple with few variables, belonging to the exact sciences where cause-and-effect relationships hold true. Unfortunately, there is little in social science and the humanities that is amenable to treatment as an exact science. Rather, these are fields where inductive practices tend to predominate, with the multi-variable nature of the problems they grapple with frequently making a deductive approach difficult. This is likely to give rise to the “cyclic nullity” identified by Condorcet, who had a republican vision for academia at the time of the French Revolution. While a degree of mutual communication can be made possible by adopting measures such as agreeing in advance on accurate terminology and bringing together small discussion groups made up of people from adjacent branches of academia, what often happens in practice is that this develops into disputes that border on brawling. The cause of this frequently lies in the cultural differences between academics in relation to deduction and induction. The solution is to have the deductionist group take the problem as far as it will go using deductive methods and then have the inductionist group go as far as they can using inductive methods. In this way, each group can learn their own limits, thereby diminishing emotive debate. This process of converging on a solution by alternating between deduction and induction is important for research and development in cross-disciplinary fields. The methodology proved extremely useful in the conduct of a strict birth cohort study (forward-directed longitudinal study) undertaken as part of a major national project.

Figure 11—Converges on a Conclusion by Abductive Reasoning Using Deductive and Inductive Argument

This shows a domain overview of what was learned during the approximately 10 years of activity (including the periods before and after) of Brain-Science and Society, a trans-disciplinary project run by the Ministry of Education, Culture, Sports, Science and Technology and the Japan Science and Technology Agency (JST). As shown in Figure 10, trans-disciplinary collaboration is very difficult to achieve when dealing with fields that are widely separated from one another. Even when collaboration gets underway in practice, there are numerous obstacles to be overcome. A range of measures are especially necessary when the collaboration is between the sciences and the humanities. Generally, the sciences emphasize reason, addressing problems in terms of exact science and using deductive reasoning to identify cause-and-effect relationships. Typically, however, this is only viable in cases where the number of parameters being dealt with is low. The humanities, in contrast, tend to deal with complex societies and a large number of parameters. There are also frequent instances of “historicity” whereby events that occur by chance determine subsequent outcomes (I will write more about historicity and universality in a subsequent article). In many cases, it is more realistic to infer cause-and-effect relationships inductively rather than through logic. A practical methodology is to clearly demarcate which issues are amenable to deduction and which to induction while using Peirce’s abductive reasoning to show the way forward and applying it in a convergent manner.

While direct problems (ones in which effects are inferred from causes) are common in the analysis process, the process as a whole will also include inverse problems (ones in which causes are inferred from effects). There are cases where inverse problems do not have a solution. A practical methodology for trans-disciplinary collaboration is posted on the JST website in the form of a six-part interview. The following link is to the sixth of these interviews.

Part 6 Toward Science and Technology for Human Security and Well-Being
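The distinction between direct and inverse problems mentioned above can be made concrete with a toy example. The sketch below assumes, purely for illustration, that the "cause to effect" relationship is squaring a real number. This model and the function names are hypothetical and are not drawn from the project described here. It shows that the direct problem always yields one effect, whereas the inverse problem may yield several candidate causes or none at all.

```python
import math


def direct(cause: float) -> float:
    """Direct problem: infer the effect from a known cause (here, squaring)."""
    return cause * cause


def inverse(effect: float) -> list:
    """Inverse problem: list every real cause that would produce the effect."""
    if effect < 0:
        return []  # no real number squares to a negative value: no solution
    root = math.sqrt(effect)
    # Zero has a single cause; positive effects have two (non-unique answer).
    return [root] if root == 0 else [-root, root]


print(direct(3.0))    # one well-defined effect
print(inverse(9.0))   # two candidate causes
print(inverse(-1.0))  # no solution exists
```

In practice, inverse problems of this kind are where hypotheses have to be proposed and then tested, since the observed effect alone does not single out its cause.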

Undine Hypothesis

Frédéric François Chopin’s (1810-1849) Ballade No. 3 was inspired by the epic poem Tale of Undine by the Polish poet Adam Mickiewicz (1798-1855). The piece has also been used in ballet.

While people have long acknowledged the connection between water and life, the more you study the world, the more its enormous scope and complexity become apparent. Meanwhile, at this time when quantum computers are becoming a reality, it feels as if attempts pioneered by Roger Penrose to use quantum physics to elucidate the mechanism of human consciousness are leading us toward a new point of departure*8.

*8 Roger Penrose, “The large, the small and the human mind,” Cambridge University Press, 1997

While water and life also have a deep connection in terms of energy and entropy, when looked at in terms of the evolution of information propagation, it feels like further progress on elucidating brains and bodies is being made through the structured approach of generative AI. I would like to coin the term “undine problem” for the set of problems like this that, from the micro to the macro level, are tied together on a foundation of water, waves, and life. The word “undine” (“ondine” in French and “ondina” in Italian) derives from the Latin “unda” (wave) and refers to nymphs, spirits who are associated with water.

Figure 12—Undine Hypothesis: Life is the Propagation of Information Exclusively in Water

I will come back to discuss this hypothesis in the third article of Part 3.

Fundamental Ethics Problem of Generative AI

As I have discussed above, generative AI has advanced by using electronic means to process the language system that humans have acquired through evolution, doing so at speeds that evolution cannot hope to match. As a system, however, language can generate an infinite quantity of meaningless output while still maintaining perfect grammar. This is why I believe that serious consideration needs to be given to the ethics of AI development given its potential for being incompatible with humans continuing to live in a human way. One example would be when new dialogue is generated based on information from a person’s past life or when a record of this is retained on the Internet. When this is done as a business, there is a recognized need for in-depth consideration of the ethics involved. While the human participant in a dialogue may find it comforting, there is also the potential for them to suffer serious emotional harm. This is a problem that warrants urgent attention.

In this article, considering the evolution of modern humanity and of AI and other human artifacts has shed light on what next will be important for the future. Having arisen from a single cell, we human beings should not forget that we are in essence all distant relatives of one another and that our lives are sustained by water and sunlight. Modern humans were the first species to acquire a sophisticated empathy (warm-heartedness, or ethics), and rather than reverting back to being predators (or dictators) in the wild, I believe that we should make careful use of the artifacts we devise by filling our hearts with this empathy and always seeking coexistence between ourselves and others.