Acquisition and Use of Action Recognition Data at Automotive Manufacturing Plants

Highlight

While the acquisition of equipment data for purposes such as productivity improvement is well advanced in the manufacturing workplace, data on the actions of workers is difficult to collect and has been limited to visual observation. This is a major issue for the automotive industry, where assembly work has a direct impact on product quality. To enable the digitalization and use of this action recognition data, wearable sensors developed by Hitachi are being used to generate semantic data (timestamped data on worker actions and circumstances, the work they are doing, and events) from sensing data such as pressures and accelerations. This semantic data can then be used for a range of purposes such as training or improvements in areas like quality, productivity, and safety. This article describes this work and its intended future applications.

Introduction

The operation of production lines like those used in automotive manufacturing can be broadly divided into fabrication and assembly work. Fabrication primarily involves the use of machines to form materials into the desired shape and this is an area where the automation of equipment and use of the Internet of Things (IoT) to make data available are well advanced. Assembly work, on the other hand, involves fitting parts together to produce the finished product. As much of it consists of the manual installation of a diverse variety of components, the number of operating procedures involved is huge. Because the means for digitally capturing data about production line workers are limited, little progress has been made on the digitalization and digital transformation (DX) of assembly work.

In response, Hitachi has been engaged in the research and development of solutions for diverse applications that use wearable sensors to generate action recognition data with a wide range of potential uses. This article gives an overview of software currently under development for making use of data from sensing devices using techniques such as artificial intelligence (AI) for conversion and output, and describes its intended future applications.

Overview of New Technology

This newly developed technology for the use of action recognition data provides information in real time in the form of semantic data suitable for a wide variety of purposes, including work recognition results (the worker’s current circumstances and what they are doing). The information is based on pressures, accelerations, and other data from sensing devices incorporated into gloves or other workwear that collect measurements from the person wearing them. Rather than being output as pressures or other raw data, this information is put through a recognition process, meaning it is in a form that customers can put to many different uses, including for training or for quality, productivity, and safety improvement. Hitachi is currently working with customers on proof-of-concept (PoC) trials in anticipation of practical applications and is preparing the software for commercial release.

Overview of Action Recognition Software

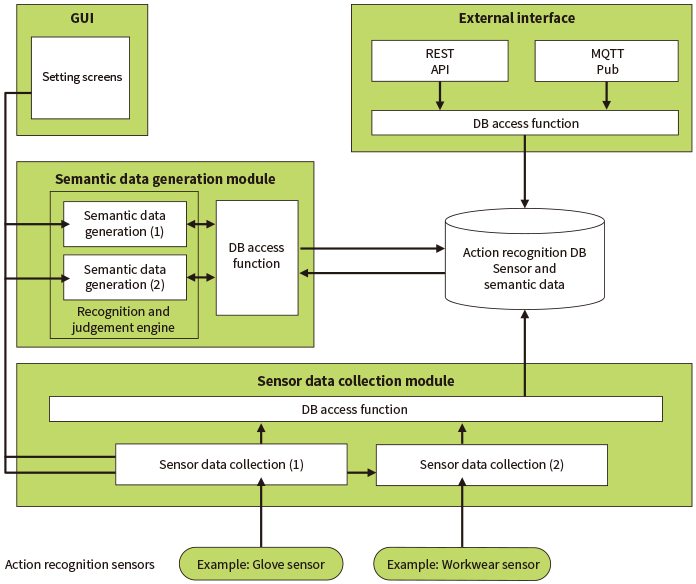

Figure 1: Flowchart of Action Recognition Software. The action recognition software is made up of a sensor data collection module, semantic data generation module, action recognition DB, a GUI, and external interfaces.

Figure 1 shows a flowchart of the action recognition software. It is made up of a sensor data collection module, action recognition database (DB), semantic data generation module, interfaces, and a graphical user interface (GUI).

Data from the sensing devices is initially sent to the sensor data collection module where it is processed and saved in the action recognition DB. The semantic data generation module retrieves sensing data from the DB, processes it into semantic data, and then saves it back in the DB. Both the sensing and semantic data in the DB can be accessed externally via a representational state transfer application programming interface (REST API) or output using the Message Queuing Telemetry Transport (MQTT) protocol for real-time use.

The sensor data collection module processes sensing data from the measurement devices. The different forms of sensing data are tagged with IDs to distinguish them from each other. A timestamp function is also included to track the timings of the different forms of sensing data.
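The tagging and timestamping described above can be illustrated with a minimal sketch. The article does not give the actual record layout, so the class name, field names, and ID format here are all assumptions made for illustration.

```python
from dataclasses import dataclass
import time
from typing import Callable, Sequence


@dataclass(frozen=True)
class SensorRecord:
    """One sensing sample after collection (all field names are hypothetical)."""
    device_id: str    # which wearable produced the sample, e.g. a glove
    data_type: str    # form of sensing data, e.g. "pressure" or "acceleration"
    timestamp: float  # seconds since the epoch, assigned at collection time
    values: tuple     # raw channel readings


def collect(device_id: str, data_type: str, values: Sequence[float],
            clock: Callable[[], float] = time.time) -> SensorRecord:
    """Tag a raw sample with its IDs and a timestamp."""
    return SensorRecord(device_id, data_type, clock(), tuple(values))


# Usage: a fixed clock keeps the example deterministic.
record = collect("glove-01", "pressure", [0.12, 0.80, 0.05],
                 clock=lambda: 1700000000.0)
print(record)
```

Passing the clock in as a parameter is a design choice of this sketch only; it makes it easy to replay recorded data with its original timings.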

The semantic data generation module generates semantic data from the sensing data and is described in detail below.

A standard in-memory database that supports real-time operation is used for the action recognition DB. The functional modules and external interface insert and retrieve the sensing data and semantic data via an internal interface called the DB access function.
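As a rough illustration of the DB access function, the sketch below uses a plain Python class as a stand-in for the in-memory database. The article does not name the database product or its interface, so the class, method names, and stream keys are hypothetical.

```python
class ActionRecognitionStore:
    """Toy in-memory stand-in for the action recognition DB (hypothetical API)."""

    def __init__(self):
        # Maps a stream key to a list of (timestamp, payload) rows.
        self._streams = {}

    def insert(self, stream, timestamp, payload):
        """Save one sensing or semantic data item under a stream key."""
        self._streams.setdefault(stream, []).append((timestamp, payload))

    def query(self, stream, start, end):
        """Return rows with start <= timestamp < end, ordered by timestamp."""
        rows = [r for r in self._streams.get(stream, []) if start <= r[0] < end]
        return sorted(rows, key=lambda r: r[0])


store = ActionRecognitionStore()
store.insert("sensing/glove-01/pressure", 2.0, {"values": [0.8]})
store.insert("sensing/glove-01/pressure", 1.0, {"values": [0.1]})
store.insert("semantic/worker-07", 2.0, {"action": "grasping"})

rows = store.query("sensing/glove-01/pressure", 0.0, 5.0)
print(rows)
```

Keeping sensing data and semantic data behind the same two calls mirrors the description of a single internal interface shared by the functional modules and the external interface.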

The software also includes an external interface that provides for real-time output of the sensing and semantic data in the DB via a REST API and MQTT. This simplifies application development and provides the means for users to integrate and utilize the work recognition data.
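To give a sense of what an application might receive over MQTT or the REST API, the following sketch builds one semantic data item as a topic and JSON payload. The message schema is not described in the article, so the topic layout and every field name are assumptions, and the actual publishing step is deliberately left out.

```python
import json
from datetime import datetime, timezone


def to_message(worker_id, kind, label, timestamp):
    """Build a (topic, JSON payload) pair for one semantic data item.

    The topic layout and field names are hypothetical.
    """
    topic = f"action-recognition/{worker_id}/{kind}"
    payload = json.dumps({
        "worker_id": worker_id,
        "kind": kind,    # e.g. "action", "circumstance", or "event"
        "label": label,
        "timestamp": datetime.fromtimestamp(timestamp, tz=timezone.utc).isoformat(),
    }, sort_keys=True)
    return topic, payload


topic, payload = to_message("worker-07", "event", "connector plugged in",
                            1700000000.0)
print(topic)
print(payload)
```

A per-worker, per-kind topic hierarchy would let a subscriber pick up only the streams it needs, for example events for one worker.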

Development

This section describes the technologies developed for use in the semantic data generation module, a core function of the action recognition software.

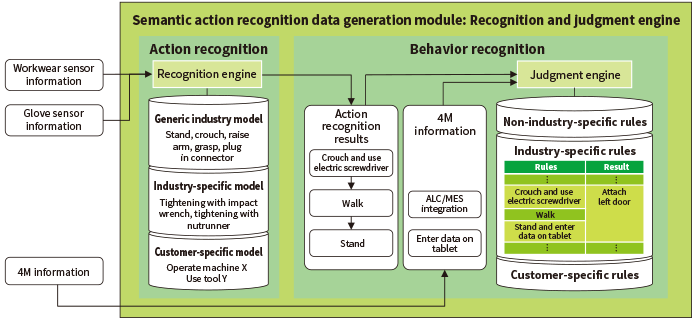

In addition to interpreting data from individual devices, semantic data generation is also able to perform recognition using a mix of data from multiple devices. The module is made up of an action recognition function that uses AI to identify individual actions, mainly from sensor data, and a behavior recognition function that utilizes the results of action recognition for rule-based recognition and works at the level of the workplace’s operating procedures (see Figure 2).

The action recognition function has separate functions for the continuous output of worker actions and for the output of event information. The former outputs data describing simple worker movements or circumstances that are only loosely connected to the task involved, such as grasping, turning, standing, or crouching. It works by supplying real-time pressure and gyroscope data together with skeletal posture information to a recognition and judgment engine that incorporates device-specific algorithms for semantic data generation. Event output, meanwhile, identifies discrete events such as “connector plugged in” from a combination of pressure and audio data. These functions were developed using a combination of rules-based judgment and machine learning and are currently undergoing further fine-tuning to improve accuracy. It is anticipated that they will be able to identify several dozen actions, circumstances, and events in the future.
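The event output can be pictured with a small rule-based sketch that reports “connector plugged in” when a rise in glove pressure coincides with a click-like sound. This covers only a rule-based portion and is not the recognition and judgment engine itself; the threshold, the time window, and the input formats are assumptions.

```python
def detect_plug_in_events(pressure, audio_peaks,
                          pressure_threshold=0.7, window=0.2):
    """Return timestamps where a pressure rise coincides with an audio click.

    pressure: time-ordered list of (timestamp, value) samples
    audio_peaks: timestamps at which a click-like sound was detected
    The threshold and window values are placeholders, not measured figures.
    """
    events = []
    previous = 0.0
    for t, value in pressure:
        # A rising edge is the first sample at or above the threshold.
        rising_edge = previous < pressure_threshold <= value
        if rising_edge and any(abs(t - a) <= window for a in audio_peaks):
            events.append(t)
        previous = value
    return events


pressure = [(0.0, 0.1), (0.1, 0.8), (0.2, 0.9), (0.3, 0.2), (1.0, 0.75), (1.1, 0.3)]
audio_peaks = [0.15, 2.0]
events = detect_plug_in_events(pressure, audio_peaks)
print(events)  # [0.1]
```

The second pressure rise at 1.0 s is ignored because no click occurs near it, which shows why combining the two data sources is less prone to false detections than pressure alone.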

While the recognition and judgment engine is suitable for use in a range of industries, algorithms for specific industries or customers are also available so that the best-suited recognition results can be used. This works in much the same way that machine translation switches between topic-specific models. For automotive manufacturing, for example, the module can run an additional recognition and judgment engine that is aware of the relevant tools and parts and generates recognition outputs such as “tightening with an impact wrench” or “tightening with a nutrunner.” This engine can acquire tool-specific vibration characteristics from accelerometers and use them alongside the data used for generic recognition when making judgments.
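One way to picture the switching between generic and industry-specific engines is a simple registry with a generic fallback, sketched below. The function names, the feature keys, and especially the 30 Hz vibration boundary are invented for illustration and do not reflect real tool characteristics or the actual engines.

```python
def generic_engine(features):
    """Generic recognition: a hypothetical rule on grip pressure only."""
    return "grasping" if features.get("grip_pressure", 0.0) > 0.5 else "idle"


def automotive_engine(features):
    """Adds hypothetical tool awareness from the dominant vibration frequency (Hz)."""
    freq = features.get("vibration_hz")
    if freq is None or features.get("grip_pressure", 0.0) <= 0.5:
        # No tool signature available, so fall back to the generic result.
        return generic_engine(features)
    # The 30 Hz boundary is a placeholder, not a measured tool characteristic.
    if freq >= 30.0:
        return "tightening with an impact wrench"
    return "tightening with a nutrunner"


ENGINES = {"generic": generic_engine, "automotive": automotive_engine}


def recognize(features, domain="generic"):
    """Pick the engine for the given domain, defaulting to the generic one."""
    return ENGINES.get(domain, generic_engine)(features)


sample = {"grip_pressure": 0.9, "vibration_hz": 45.0}
print(recognize(sample))
print(recognize(sample, domain="automotive"))
```

With the same input, the generic engine reports only that something is being grasped, while the automotive engine names the tool in use.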

The behavior recognition function makes judgments about the results of action recognition by combining them with other data, such as the sequence in which the actions are performed, “human, machine, material, method” (4M) information from external sources, and scheduling and work instructions from the manufacturing execution system (MES). The intention is to let users specify the IF-THEN and other rules for these judgments in the GUI by using the open-source Node-RED software.
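The IF-THEN style of behavior recognition can be sketched as rules that match an ordered sequence of recognized actions together with 4M context. This is plain Python used as a stand-in, not a Node-RED flow, and the rule format, part names, and task names are all hypothetical.

```python
def contains_in_order(actions, pattern):
    """True if every label in pattern appears in actions in the same order."""
    remaining = iter(actions)
    # Each membership test consumes the iterator up to the matching label.
    return all(label in remaining for label in pattern)


# Hypothetical rules: IF the actions occur in order AND the 4M context matches,
# THEN report the task from the operating procedure.
RULES = [
    {"if_sequence": ["grasping", "connector plugged in"],
     "if_context": {"part": "wiring harness"},
     "then_task": "connect wiring harness"},
    {"if_sequence": ["grasping", "tightening with a nutrunner"],
     "if_context": {"part": "wheel"},
     "then_task": "fasten wheel nuts"},
]


def recognize_task(actions, context, rules=RULES):
    """Return the first task whose rule matches, or None if no rule applies."""
    for rule in rules:
        context_ok = all(context.get(k) == v
                         for k, v in rule["if_context"].items())
        if context_ok and contains_in_order(actions, rule["if_sequence"]):
            return rule["then_task"]
    return None


actions = ["standing", "grasping", "turning", "connector plugged in"]
task = recognize_task(actions, {"part": "wiring harness", "station": "A3"})
print(task)  # connect wiring harness
```

Unrelated actions such as “standing” or “turning” between the required steps do not prevent a match, which suits actions that are only loosely connected to the task.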

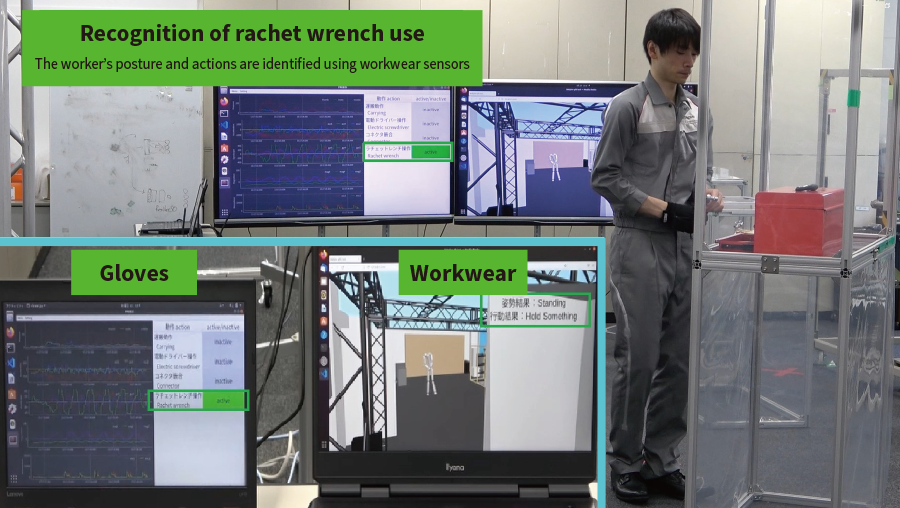

Current development work includes ongoing trials at customer sites aimed at improving recognition performance. A demonstration video has also been produced using the system in a mockup of a customer workplace. It shows the system accurately identifying specific actions (such as plugging in connectors or using an electric screwdriver) and repeated simulated actions (see Figure 3).

The wearable devices that generate the sensing data are intended to be worn routinely on production lines and other workplaces, not only during training or when collecting measurements. Hitachi is working with cooperating device vendors on improvements, including testing the use of its wearable devices on customer production lines.

Figure 2: Logical Structure of Semantic Data Generation Module. The semantic data generation module identifies basic actions and situations. These results are then combined with 4M information from external sources to identify tasks at the level of the customer’s assembly operating procedures.

Figure 3: Demo Screen Showing Task Recognition. The subject was asked to perform a simulated assembly task while wearing a glove sensor and workwear sensor. The photographs show the subject’s use of a ratchet wrench being identified from glove sensor data, and their posture (standing) and action (holding something) being identified from workwear sensor data.

Intended Future Applications

The system can be used on its own as a way to keep track of worker actions from pressure and other sensing data. However, it should be able to deliver even more value to customers when combined with IoT data and other process information held by the plant that relate to the identified actions. Possibilities include quality and productivity improvement, skills training, and health and safety applications. The following considers each of these in turn, indicating which industries could potentially benefit and giving examples of how the system might be used.

- Quality

For quality applications, it is anticipated that the system would be used primarily in manufacturing and applied to manual tasks performed on a production line. When the system is used to monitor the work being done by workers, it can detect actions that are unusual, differ from those of other workers, or are non-standard. This should help to reduce the risk of factory defects.

- Productivity

As with quality, most productivity applications are in manufacturing and deal with manual tasks performed on a production line. Using the system to monitor the work being done by workers should provide the information needed to analyze how long it takes to perform each task and to decide on line improvements.

- Training

Potential training applications include helping to upskill workers in manufacturing and services. When training new recruits, for example, it could be used to assess the skills of individual workers or to identify where to improve their work practices by comparing their actions with those of experienced workers.

- Safety

For safety, the system could be applied to production line tasks or field maintenance and inspection work in industries such as manufacturing, services, or railways. By monitoring the work and other actions of workers on a production line or engaged in maintenance inspections, and checking for actions that are dangerous or cause overloads, the system could inform operational improvements or work procedures that enhance safety.
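As one example of how timestamped semantic data might support the quality and productivity applications listed above, the sketch below derives how long each task took and flags occurrences that ran well over the typical time for that task. The record format, task names, and the 1.5x factor are assumptions chosen for illustration.

```python
from statistics import median


def task_durations(records):
    """records: time-ordered (timestamp, task) entries.

    Each task is assumed to last until the next entry begins.
    """
    return [(task, nxt - t)
            for (t, task), (nxt, _) in zip(records, records[1:])]


def flag_slow(durations, factor=1.5):
    """Return occurrences that took longer than factor x the task's median time."""
    by_task = {}
    for task, d in durations:
        by_task.setdefault(task, []).append(d)
    typical = {task: median(ds) for task, ds in by_task.items()}
    return [(task, d) for task, d in durations if d > factor * typical[task]]


records = [
    (0.0, "fit connector"), (4.0, "tighten bolt"),
    (10.0, "fit connector"), (14.0, "tighten bolt"),
    (20.0, "fit connector"), (30.0, "tighten bolt"),
    (36.0, "end"),
]
durations = task_durations(records)
slow = flag_slow(durations)
print(slow)  # [('fit connector', 10.0)]
```

The same per-task durations could also feed a comparison between new recruits and experienced workers in a training setting.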

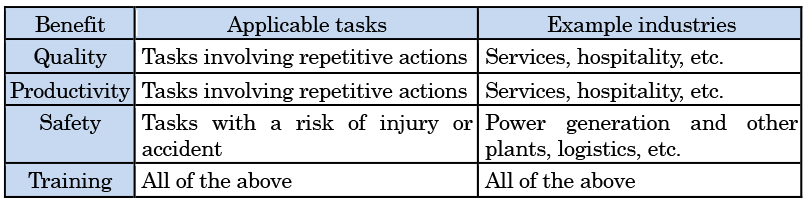

While Hitachi is currently considering the applications listed above, the system has considerable potential for use anywhere that people engage in physical actions. As Hitachi builds up use cases and operational data, other areas with the potential for future applications include services such as physical rehabilitation or nursing, hospitality, and the building industry, including public works and residential construction (see Table 1).

Table 1: Tasks for which System could be Used. The table lists tasks and industries where the system could be put to use beyond the manufacturing sector, arranged by the benefits it can deliver.

Conclusions

In addition to technical enhancements to things like task recognition performance and interoperation with devices other than the glove and workwear sensors, Hitachi is also planning to deploy this technology as a solution for use across the many different places where people work. The intention is to contribute to creating a human-centric society in a wide range of areas, utilizing the data it provides for purposes such as training and improvements to quality, productivity, and safety, not only in manufacturing, but also in areas such as warehouse work, fieldwork, nursing, and welfare.